In this article we explain how to install SAP Datahub on an open-source infrastructure and what to keep in mind along the way. This first part deals with the underlying container and storage systems. If you already know the essentials of Kubernetes, container networks and dynamic volume provisioning, you can skip it and move on directly to part 2, the Datahub installation itself. Otherwise, you are welcome to start right here!

SAP officially releases only enterprise Kubernetes products such as SUSE CaaS Platform or Red Hat OpenShift for on-premise SAP Datahub installations, so we will give you a short overview of how to get the Datahub running on an officially unsupported but free-to-use Kubernetes installation. Whether your company is just beginning to look into the Datahub world or needs it for development purposes, the approach recommended by SAP will sooner or later require a large financial investment, even just for the underlying container infrastructure. An implementation as described in this article can help you build know-how with these products and save money. Kubernetes itself is an open-source project originally developed by Google, and for non-productive environments there is no need for any enterprise overhead, provided you pay attention to a few simple details.

Let’s start with a few basic thoughts and decisions on our project to prevent problems later on. Besides the commercial offerings, there are several open-source projects that aim to provide a simple, easy-to-handle Kubernetes infrastructure. So why don’t we use one of those? The problem is that most of them adopt the newest versions of Kubernetes, Docker, Python and other components as soon as they become available, which is exactly what we don’t want. SAP is usually conservative in releasing its products for new versions of third-party software, so automatic updates might break the installed system. We therefore recommend installing Kubernetes directly from the Google repositories on your preferred Linux distribution and performing the necessary configuration yourself, to keep control over the versions in use. After testing the Datahub installation on different distributions, the Red Hat Enterprise Linux based CentOS 7 has proven to be very stable and compatible with all of our requirements.

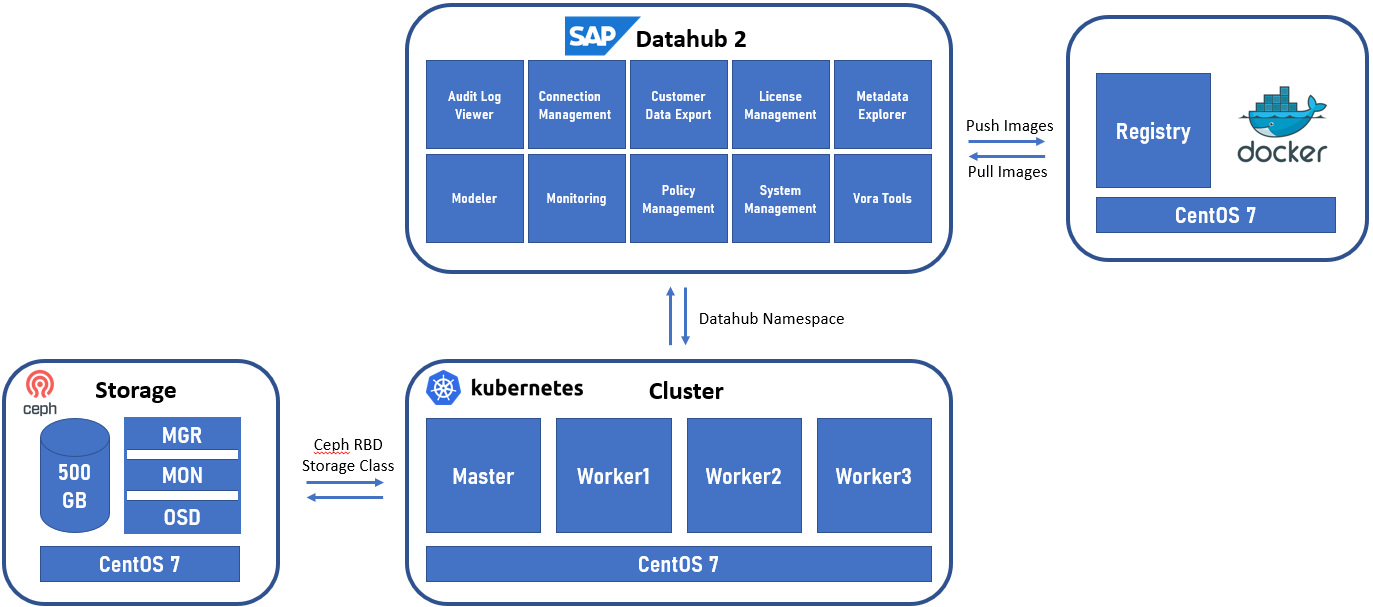

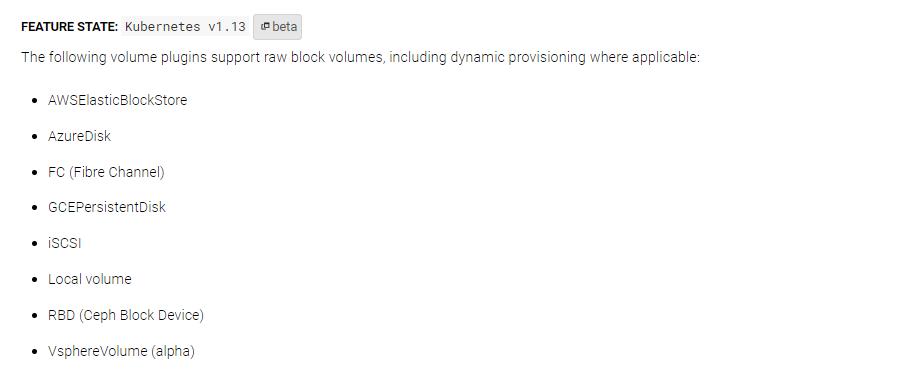

Apart from the container environment, a storage system that supports dynamic volume provisioning is of great importance for the Datahub installation. SAP recommends specific storage solutions in combination with corresponding container platforms. In our case, we also wanted to rely on Open Source, so we checked the Kubernetes documentation to figure out which solutions would be compatible.

Illustration 1: Kubernetes persistent volume storages

We have had good experiences with Ceph RBD so far. Easy installation instructions are available online, and the storage system can be adapted and scaled to individual requirements. So, let’s go ahead and show what we are about to do.

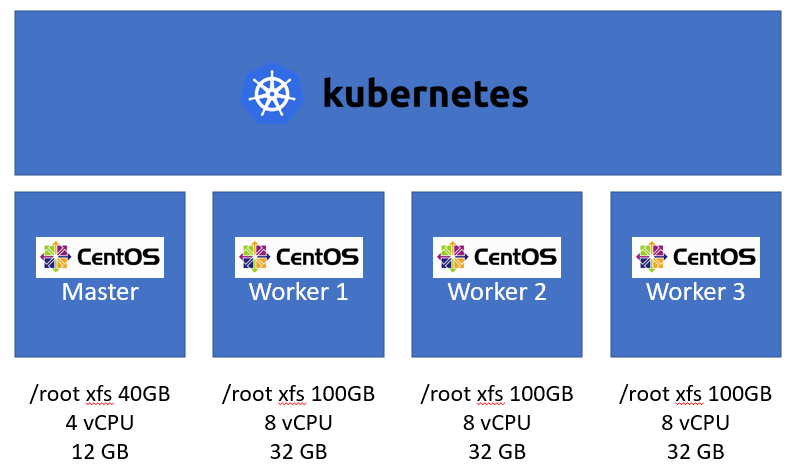

For the Kubernetes infrastructure we will use a cluster consisting of one master and three worker nodes. A development installation does not really need high availability, and running more than one master node would increase complexity. We therefore decided on this setup, which still provides good computing performance through its three workers.

At this point, we would like to mention that the Datahub also runs on less powerful hardware than we use for our installation. How much you provide depends on your individual needs and the resources available.

Make sure you are aware of the following checkpoints for the infrastructure installation:

1. Install the base OS with minimal packages and disable the swap partition. We configure 40 GB for /root on the master and 100 GB on the worker nodes, using LVM.

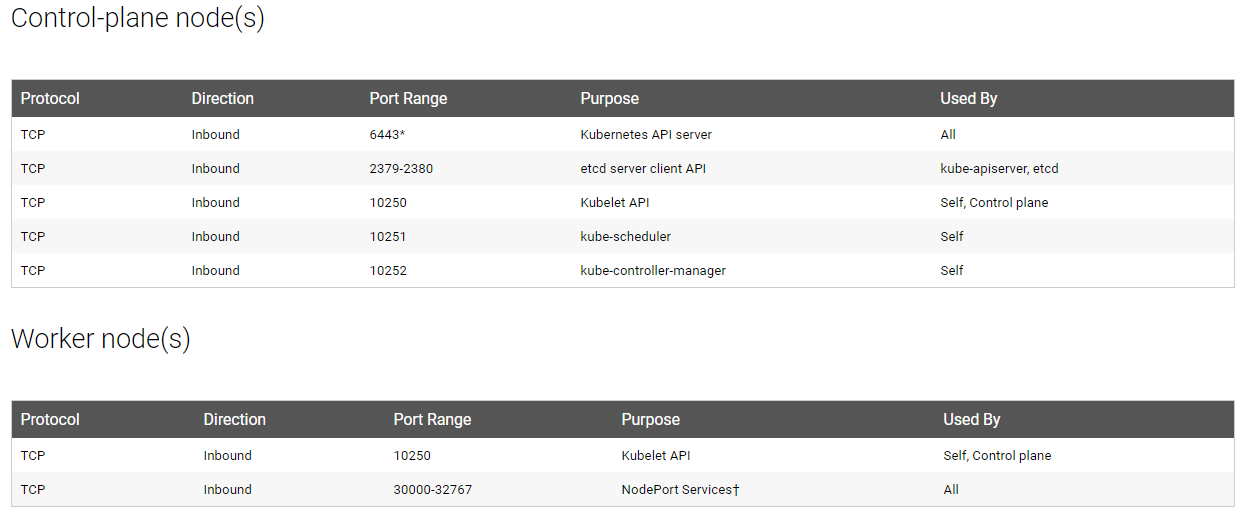
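Disabling swap has two parts: turning it off for the running system and keeping it off after a reboot. The following is a minimal sketch of the second part, assuming swap is configured through an fstab-style file. The function name `disable_swap_in_fstab` is hypothetical and not part of the original setup.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style
# file so that swap stays off after a reboot.
# A backup copy of the file is written next to it with the suffix .bak.
disable_swap_in_fstab() {
    fstab="${1:-/etc/fstab}"
    # Match uncommented lines that contain "swap" as a whitespace-separated field.
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

On a real node you would run `swapoff -a` as root to switch swap off immediately and then call `disable_swap_in_fstab` without arguments. Passing a file name lets you try the function on a copy of `/etc/fstab` first.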

2. Check the firewall settings and configure the required rules.

Illustration 2: Kubernetes communication ports

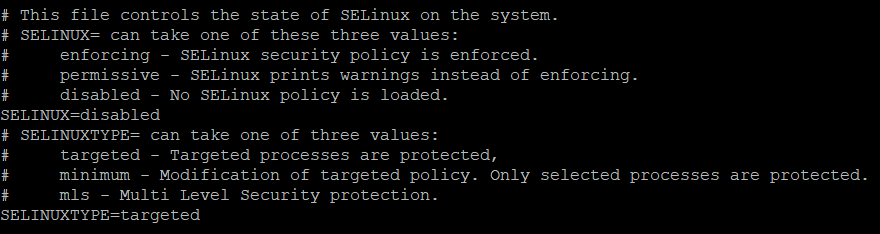
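As a rough sketch of how such rules could be generated for firewalld, the function below prints the commands for one node role without applying anything. The port list is an assumption taken from the kubeadm documentation for Kubernetes 1.13 (API server, etcd, kubelet, scheduler, controller manager, NodePort range) plus TCP 179 for Calico's BGP peering. Compare it against the port table for your own setup before using it. The function name `firewall_rules` is hypothetical.

```shell
# Hypothetical helper: print the firewalld commands for one node role.
# Nothing is applied here; the output is meant to be reviewed first.
firewall_rules() {
    role="$1"
    case "$role" in
        # Assumed control-plane ports: API server, etcd, kubelet,
        # kube-scheduler, kube-controller-manager.
        master) ports="6443 2379-2380 10250 10251 10252" ;;
        # Assumed worker ports: kubelet and the default NodePort range.
        worker) ports="10250 30000-32767" ;;
        *) echo "usage: firewall_rules master|worker" >&2; return 1 ;;
    esac
    for port in $ports; do
        echo "firewall-cmd --permanent --add-port=${port}/tcp"
    done
    # Assumption: Calico runs in its default BGP mode, which uses TCP 179.
    echo "firewall-cmd --permanent --add-port=179/tcp"
    echo "firewall-cmd --reload"
}
```

Calling `firewall_rules master` shows the commands for the master node. Once the list looks right, it can be applied as root with `firewall_rules master | sh`.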

3. Edit the SELinux config and disable it:

[root@datahubmaster ~]# vi /etc/selinux/config
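If you prefer a scripted change over editing the file by hand, the same edit can be sketched with `sed`. This assumes the file contains a line starting with `SELINUX=`, as it does on a default CentOS 7 installation. The function name `set_selinux_mode` is hypothetical.

```shell
# Hypothetical helper: set the SELINUX= line of an SELinux config file.
# A backup copy of the file is written next to it with the suffix .bak.
set_selinux_mode() {
    mode="$1"
    config="${2:-/etc/selinux/config}"
    # Only the SELINUX= line is touched; SELINUXTYPE= does not match the pattern.
    sed -i.bak -E "s/^SELINUX=.*/SELINUX=${mode}/" "$config"
}
```

Running `set_selinux_mode disabled` as root changes the default config file. The new mode only takes effect after a reboot; until then, `setenforce 0` switches the running system to permissive mode.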

4. Install the following components:

- Kubernetes v1.13

- containerd.io and Docker

- Python 2.7 and python-yaml (required by the SAP Datahub)

- ceph-common (required later for the storage connection)

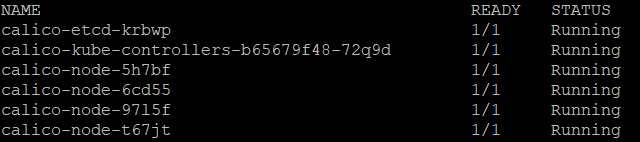
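Since the reason for installing these components by hand is to keep control over their versions, a small guard can confirm that a node reports the expected minor release. The following is only a sketch. The function name `matches_minor` is hypothetical, and it assumes a version string that contains the release in the form `v1.13.x`, as printed by `kubelet --version`.

```shell
# Hypothetical helper: succeed if a version string such as
# "Kubernetes v1.13.5" belongs to the expected minor release (e.g. 1.13).
matches_minor() {
    expected="$1"
    actual="$2"
    case "$actual" in
        *"v${expected}."*) return 0 ;;
        *) return 1 ;;
    esac
}
```

A check on a node could then look like `matches_minor 1.13 "$(kubelet --version)" || echo "unexpected Kubernetes version"`.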

5. Bootstrap the cluster and deploy your preferred pod network. We used Calico, which is known for good performance and scalability. For more information, just follow the link. Your kube-system namespace should now look something like this:

[root@datahubmaster ~]# kubectl -n kube-system get pods

6. Install Helm and deploy Tiller to your cluster. If everything works correctly, you will see one additional pod.
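To check the pod list without reading it line by line, the output of `kubectl get pods` can be filtered for pods that are not up yet. This is a minimal sketch that assumes the default column layout (NAME, READY, STATUS, RESTARTS, AGE). The function name `report_unready_pods` is hypothetical.

```shell
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin,
# print the name of every pod whose STATUS column is neither Running nor
# Completed, and return non-zero if at least one such pod was found.
report_unready_pods() {
    awk '$3 != "Running" && $3 != "Completed" { print $1; bad = 1 }
         END { exit bad + 0 }'
}
```

On the master node it could be used as `kubectl -n kube-system get pods --no-headers | report_unready_pods`. No output and a zero exit status mean that all listed pods, including the Tiller pod, are running.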

As mentioned above, we have had good experiences with Ceph RBD as our dynamic storage. The installation is well documented and easy to handle if you follow the steps here. During the Datahub installation several volumes are created, so make sure you provide at least 500 GB of space.

Hint: The storage system can be deployed at very large scale, fail-safe and redundant across several nodes. For the time being, we think it is sufficient to save resources by installing all components on one server.

After you have put the storage unit into operation, deploy the corresponding StorageClass to your cluster and check whether a test claim dynamically creates a persistent volume, for example with a test claim like the one found here.
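If you do not have a test claim at hand, a minimal one could be written as follows. This is a sketch under assumptions: the storage class name `ceph-rbd`, the claim name `ceph-test-claim` and the 1Gi size are placeholders, and `storageClassName` must match the name of the StorageClass you actually deployed.

```shell
# Write a minimal PersistentVolumeClaim manifest to the current directory.
cat > ceph-test-claim.yml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-test-claim
spec:
  accessModes:
    - ReadWriteOnce
  # Assumption: replace ceph-rbd with the name of your own StorageClass.
  storageClassName: ceph-rbd
  resources:
    requests:
      storage: 1Gi
EOF
```

When the claim is created and dynamic provisioning works, its status changes to `Bound` and a matching persistent volume appears in the cluster.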

[root@datahubmaster ~]# kubectl create -f ceph-test-claim.yml

[root@datahubmaster ~]# kubectl get pvc

If everything is working, you are ready to move on to the next steps and install SAP Datahub via the jump host with a Docker registry.
