Data sets used in machine learning (ML) are, in practice, usually unprocessed and very large. Most ML algorithms require their input in a specific form, which data preprocessing provides. Even after preprocessing, however, the data model at hand is usually very complex.

Another problem with data sets can be a lack of balance between classes. In a classification project, the ML algorithm is fed a data set that has a class attribute in addition to the regular attributes and an index. In a customer churn analysis, for example, the class attribute records for every tuple whether the respective customer has churned or not. If the data set is not balanced and, for example, only 20% of the customers churn, the model produced by the ML algorithm can become inaccurate. To counteract both problems at once, the high complexity of the data and the lack of balance, autoencoders can be created with the help of the SAP Predictive Analysis Library (PAL).

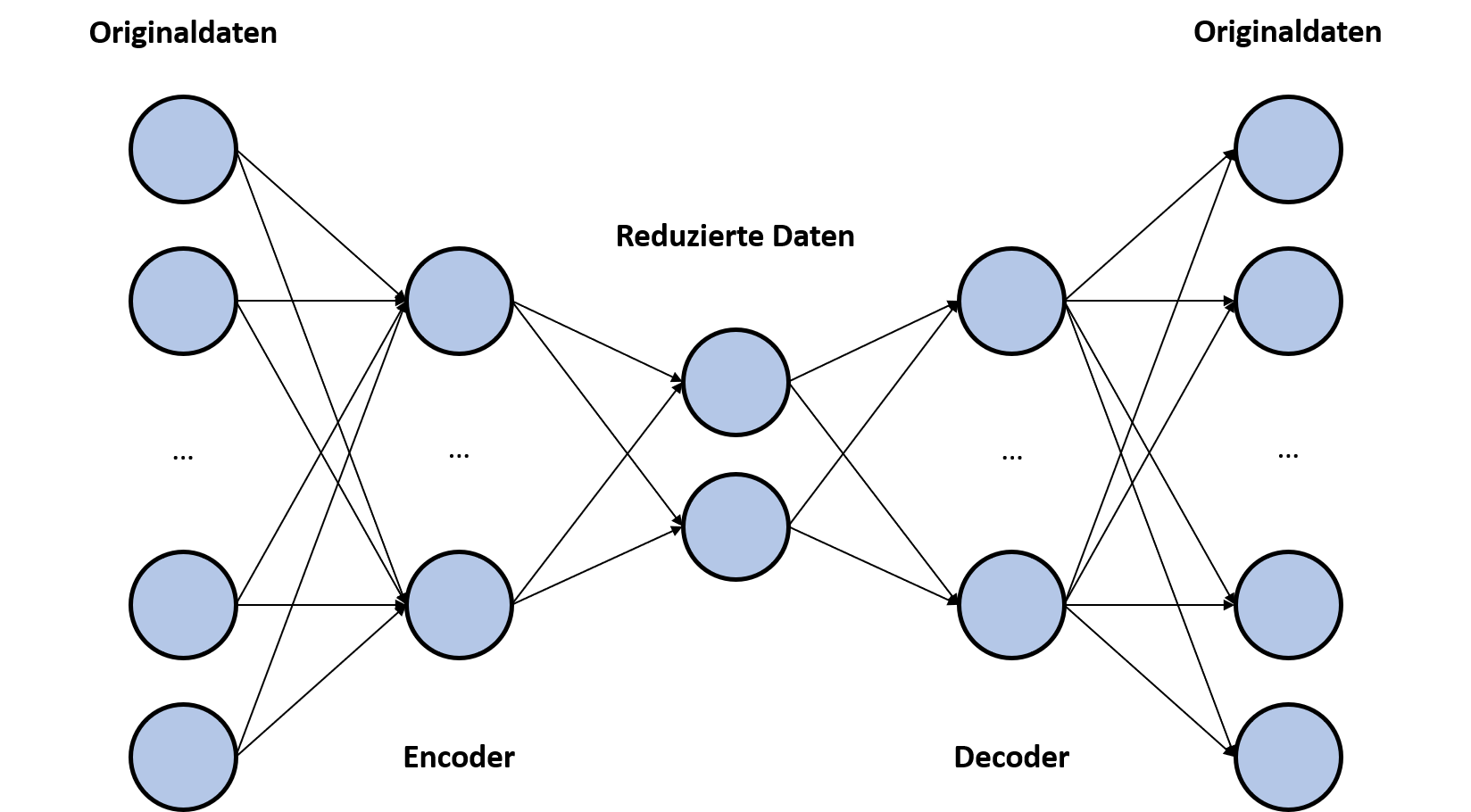

An autoencoder is an artificial neural network (ANN). As shown in Figure 1, an autoencoder consists of five elementary components: the original data, the encoder, the reduced data, the decoder and the reconstructed original data. Unlike in traditional ANNs, the output of interest is not the last layer of the network but the middle layer. When training the autoencoder, the original data is used both as input to the first layer and as the target for the last layer. In the layers in between, the data is first compressed and then mapped back to the original as closely as possible. The middle layer of the ANN thus holds a reduced form of the original data with fewer dimensions. Autoencoders are usually used for anomaly analysis and noise reduction (filtering out noisy values or elements). (https://towardsdatascience.com/auto-encoder-what-is-it-and-what-is-it-used-for-part-1-3e5c6f017726)

Figure 1: Components of an autoencoder
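
The training setup described above, in which the original data serves as both input and target, can be sketched in a few lines of NumPy. This is only a minimal illustration under assumptions, not the network that SAP PAL builds: the single hidden layer, the tanh activation, the learning rate and all function names are chosen here for brevity.

```python
import numpy as np


def train_autoencoder(X, n_latent=2, epochs=300, lr=0.5, seed=0):
    """Train a tiny autoencoder with one bottleneck layer (illustrative only)."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    params = {
        "W_enc": rng.normal(0.0, 0.1, size=(d, n_latent)),
        "b_enc": np.zeros(n_latent),
        "W_dec": rng.normal(0.0, 0.1, size=(n_latent, d)),
        "b_dec": np.zeros(d),
    }
    losses = []
    for _ in range(epochs):
        Z = np.tanh(X @ params["W_enc"] + params["b_enc"])  # encoder output
        X_hat = Z @ params["W_dec"] + params["b_dec"]       # decoder output
        err = X_hat - X                                      # the target is the input itself
        losses.append(float(np.mean(err ** 2)))

        # Backpropagation of the mean squared reconstruction error.
        g_out = 2.0 * err / (n * d)
        g_Z = g_out @ params["W_dec"].T
        g_pre = g_Z * (1.0 - Z ** 2)                         # derivative of tanh
        params["W_dec"] -= lr * (Z.T @ g_out)
        params["b_dec"] -= lr * g_out.sum(axis=0)
        params["W_enc"] -= lr * (X.T @ g_pre)
        params["b_enc"] -= lr * g_pre.sum(axis=0)
    return params, losses


def encode(params, X):
    """Map data to the reduced (middle-layer) representation."""
    return np.tanh(X @ params["W_enc"] + params["b_enc"])


def decode(params, Z):
    """Map reduced data back to the original dimensions."""
    return Z @ params["W_dec"] + params["b_dec"]
```

Calling `encode` on a data matrix returns one row per tuple with `n_latent` columns; `decode` maps such rows back to the original number of attributes.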

In the scenario we worked on, a data set for customer churn analysis was used. Together with the index and the class attribute, the data set has 21 attributes, such as gender, length of the customer relationship and monthly cost. Before the data set was processed by SAP PAL, the attribute values were scaled: all attributes were scaled to the range 0.3 to 0.7, except for the class attribute, which was scaled to the range 0 to 1 in order to give it more weight. A neural network was then created from the data set using SAP PAL. Creating such a network requires only the input data, a parameter table and an empty table for the resulting model. SAP PAL stores the network in JSON format in the model table, split into several rows of 5000 characters each. The model table was then exported, taken apart with a short Python script and imported back into the HANA database as separate model tables for the encoder and the decoder. If needed, this step can also be performed in SQL within the system itself, without exporting. The two models could then be applied with the PREDICT procedure of SAP PAL. In this way, the encoder converted the 19-dimensional data model (21 minus index and class attribute) into a two-dimensional data set.
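
Two of the steps above lend themselves to a short sketch: scaling an attribute to a target range, and reassembling a model that is stored as rows of 5000 characters. The function names and the `(row_index, content)` row layout are assumptions made for this illustration. The internal layout of the JSON that SAP PAL writes is not covered here, so the actual split into encoder and decoder is deliberately left out.

```python
import numpy as np


def scale_to_range(values, low, high):
    """Min-max scale a single attribute to the interval [low, high]."""
    values = np.asarray(values, dtype=float)
    v_min, v_max = values.min(), values.max()
    if v_max == v_min:
        # A constant attribute carries no information; place it mid-range.
        return np.full_like(values, (low + high) / 2.0)
    return low + (values - v_min) * (high - low) / (v_max - v_min)


def split_into_rows(text, row_length=5000):
    """Cut a long string into consecutive pieces of at most row_length characters."""
    return [text[i:i + row_length] for i in range(0, len(text), row_length)]


def join_model_rows(rows):
    """Rebuild the full string from (row_index, content) pairs in any order."""
    return "".join(content for _, content in sorted(rows))
```

With these helpers, regular attributes would be scaled with `scale_to_range(column, 0.3, 0.7)` and the class attribute with `scale_to_range(column, 0.0, 1.0)`. After `join_model_rows`, the result can be parsed with `json.loads` and written back in pieces with `split_into_rows`.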

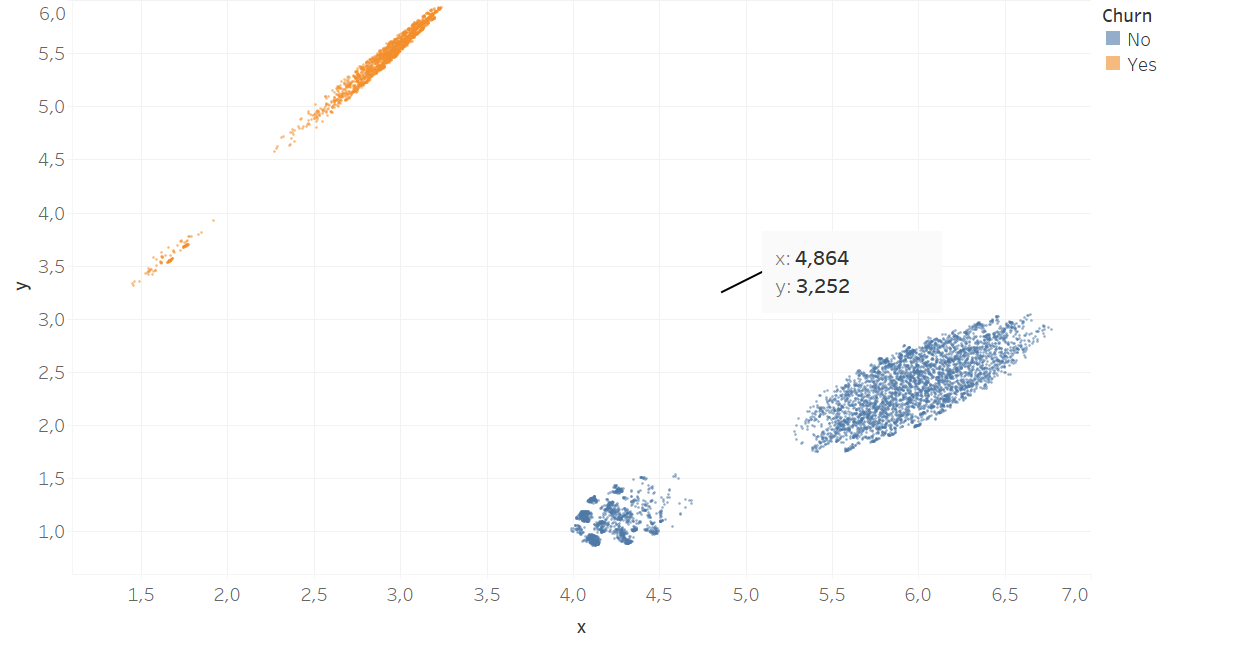

Figure 2 shows the data set in encoded form and also illustrates one use of the autoencoder. A random tuple was selected from the data set and one of its feature values was set to 13, which is well above the scaled maximum of 0.7 and therefore clearly an outlier, an anomaly. Using the PREDICT procedure, the two-dimensional values for this tuple were calculated. The tuple clearly stands out from the rest of the data points.
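
In the scenario the outlier was identified visually. As a possible complement, the same idea can be expressed as a simple score on the two-dimensional points. The following is a sketch under assumptions, not part of the SAP PAL workflow: it measures each point's distance to the median of all points and normalises it by the median absolute deviation. With several well-separated clusters, as in a churn data set, a per-cluster score would be more appropriate.

```python
import numpy as np


def outlier_scores(Z):
    """Robust distance score per encoded point; large values suggest anomalies."""
    Z = np.asarray(Z, dtype=float)
    center = np.median(Z, axis=0)                 # robust center of the point cloud
    dist = np.linalg.norm(Z - center, axis=1)     # distance of each point to the center
    med = np.median(dist)
    mad = np.median(np.abs(dist - med))           # median absolute deviation
    return (dist - med) / (mad if mad > 0 else 1.0)
```

The tuple with the highest score is the first candidate to inspect; where to draw the threshold depends on the data.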

Figure 2 also shows another benefit of the autoencoder. Adding more tuples to the data set is straightforward in the two-dimensional representation. If tuples of the class of churning customers are needed, they can simply be added to the corresponding cluster. This is particularly easy here because the preceding scaling gave the class attribute more weight, so the neural network automatically separated the tuples strongly by class. Once the desired tuples have been added, they can be translated back into the original dimensions with the decoder.

Figure 2: Representation of the coded data set
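
Adding tuples to a cluster in the two-dimensional representation can be sketched as follows. The approach shown, picking existing points of the class at random and shifting them by a small amount of Gaussian noise, is one assumed way to do it; the function name and the `spread` parameter are hypothetical. The generated points would afterwards be passed to the decoder.

```python
import numpy as np


def sample_near_cluster(Z_class, n_new, spread=0.5, seed=0):
    """Generate n_new encoded points close to the existing points of one class."""
    rng = np.random.default_rng(seed)
    Z_class = np.asarray(Z_class, dtype=float)
    # Pick existing points at random as starting positions.
    idx = rng.integers(0, len(Z_class), size=n_new)
    # Noise is scaled per dimension relative to the spread of the cluster.
    scale = spread * Z_class.std(axis=0)
    noise = rng.normal(0.0, 1.0, size=(n_new, Z_class.shape[1])) * scale
    return Z_class[idx] + noise
```

A small `spread` keeps the new points inside the cluster, so the decoded tuples stay similar to real tuples of that class.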

Conclusion

Autoencoders can be used for various purposes and are easy to create with SAP PAL. Splitting the model created by SAP PAL into an encoder and a decoder can be laborious depending on the implementation, but it can be handled as a one-time effort using appropriate SQL procedures. For anomaly analysis, it is worth emphasising how visual the analysis can be, since a two-dimensional representation of the encoded data is easy to produce. In the scenario we implemented, the data set was supplemented in its two-dimensional form with tuples of a certain class (churning customers). After conversion with the decoder, the extended data set produced significantly improved results in the ML algorithm used for the customer churn analysis. Moreover, the added tuples strongly resembled the existing ones, so the generated tuples did not distort the data set too heavily.
