August 2025

Introduction

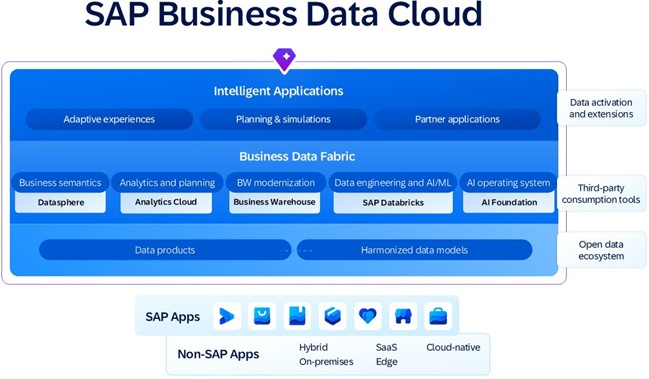

Source: https://www.sap.com/products/data-cloud.html

In this blog I would like to demonstrate how the combination of SAP Datasphere, SAP Analytics Cloud (SAC) and Databricks allows us to bundle SAP data with the output of a custom machine learning model and visualize the results.

I am going to demonstrate an Ice Cream Demand Forecast scenario, in which actual transactional sales data is combined with a weather forecast produced by a custom predictive model.

This blog is not intended to showcase a complex machine learning case or Datasphere modeling best practices, but rather to demonstrate what the interaction between the products looks like and how they complement each other. The business case described here is simplified, though the same concept can be applied to cases of any complexity, such as customer behavior analysis, personalized chatbot creation, inventory management optimization, social media campaign analysis and many more.

In this blog I am going to describe how to:

- build a Predictive Linear Regression model in Databricks for weather prediction;

- share predicted results with Datasphere;

- build an Analytical Model in Datasphere, combining transactional data and predicted forecast received from Databricks;

- consume the Analytical Model in SAC and build a Demand Forecast Story.

Before we dive in, please note that I’m not using an SAP Business Data Cloud (BDC) tenant. Datasphere and SAC will only be available within BDC starting from 1 January 2026, which is why my initial idea was to use the BDC trial. Unfortunately, my trial tenant was too limited: I could not create custom Data Products and share them with Databricks, nor could I create custom notebooks in Databricks.

My Datasphere and Databricks tenants reside in separate clouds and are connected via JDBC. BDC, in turn, uses the Delta Sharing protocol to exchange data between applications.

Nevertheless, we should keep in mind that Data Products are essentially datasets, and the only difference between the setup in this blog and a BDC tenant is the data exchange protocol.

Contents

Business Scenario

Technical implementation

- Data model overview

- Building a linear regression model in Databricks

- Modelling in Datasphere

- SAC visualization

Summary

Business Scenario

Technical implementation

Data model overview

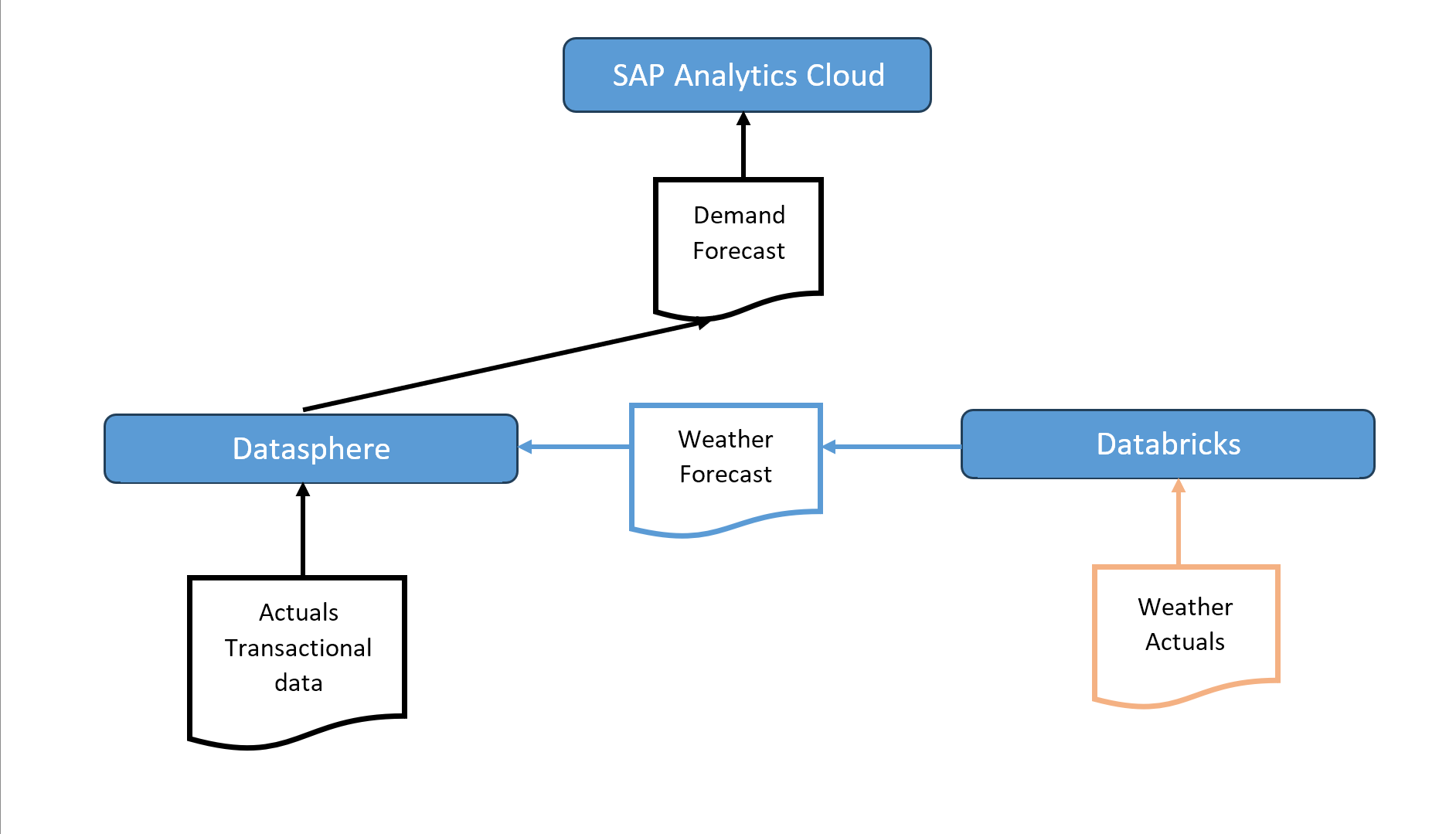

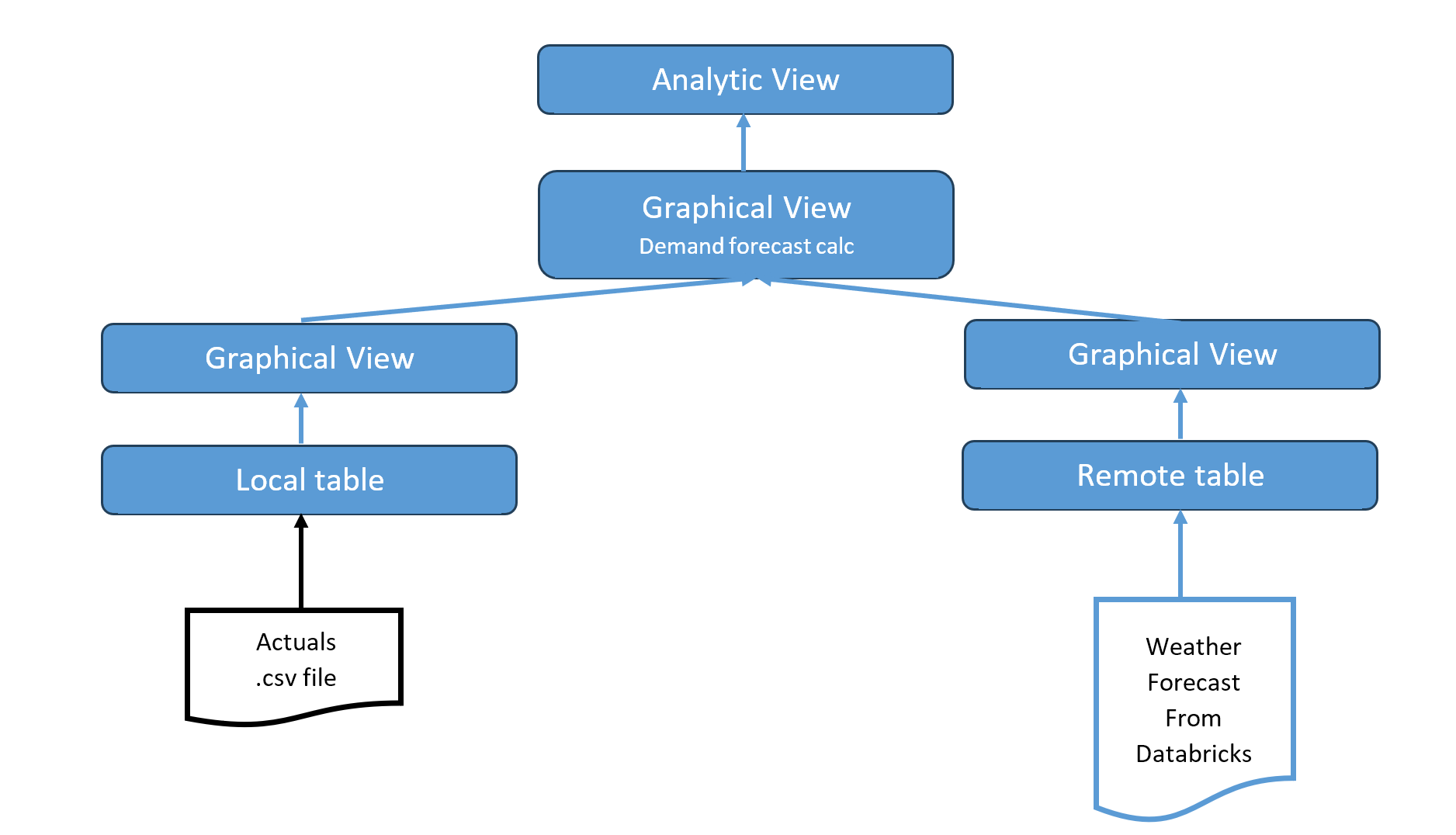

Sales actuals are loaded into Datasphere via a .csv file. In a BDC tenant this would be a data product coming from a transactional system, e.g. SAP S/4HANA Cloud.

The weather actuals dataset comes from Zenodo.org directly into Databricks (in a real-life scenario it could be any other weather service).

Databricks receives the weather actuals as a training dataset for the ML model. After the model is trained, it produces a weather forecast for the next month. The forecast is then sent to Datasphere for further modeling and calculations.

Datasphere receives the weather forecast from Databricks and calculates the Demand Forecast based on the previous year’s sales actuals.

The Analytic Model created in Datasphere then becomes the data source for an SAP Analytics Cloud story.

The complete data flow is shown below:

Building a linear regression model in Databricks

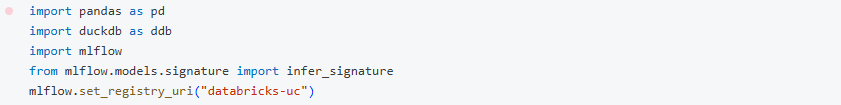

Below you can find the Python script that I use to create a Linear Regression model for weather prediction.

Install required libraries:

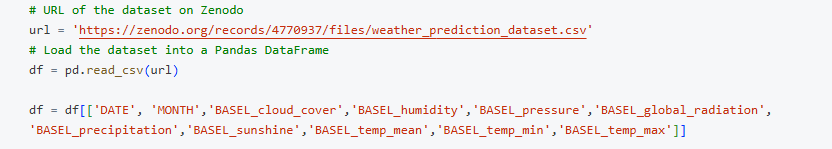

Load the dataset into a pandas DataFrame. Out of all regions I only use Basel.

Dataset is taken from: https://zenodo.org/records/4770937/files/weather_prediction_dataset.csv

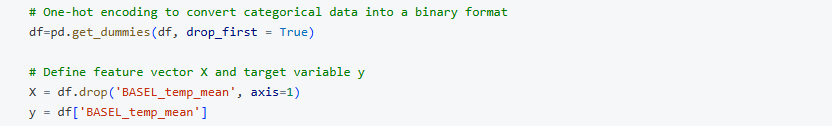
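For readers who cannot see the screenshot, here is a minimal sketch of what this loading step could look like. It assumes the CSV has a `DATE` column in `YYYYMMDD` form, a `MONTH` column, and measurement columns prefixed with the city name (for example `BASEL_temp_mean`). The helper `select_region` and the tiny in-memory sample are hypothetical; in the notebook the frame would come from `pd.read_csv` on the URL above.

```python
import pandas as pd


def select_region(df: pd.DataFrame, region: str = "BASEL") -> pd.DataFrame:
    """Keep the date columns plus every column that belongs to one region."""
    region_cols = [c for c in df.columns if c.startswith(f"{region}_")]
    if not region_cols:
        raise ValueError(f"no columns found for region {region!r}")
    base_cols = [c for c in ("DATE", "MONTH") if c in df.columns]
    out = df[base_cols + region_cols].copy()
    if "DATE" in out.columns:
        # Assumption: DATE is stored as an integer such as 20000101.
        out["DATE"] = pd.to_datetime(out["DATE"].astype(str), format="%Y%m%d")
    return out


# Tiny stand-in for the real file (values are illustrative only).
sample = pd.DataFrame({
    "DATE": [20000101, 20000102],
    "MONTH": [1, 1],
    "BASEL_temp_mean": [2.9, 3.6],
    "BASEL_humidity": [0.89, 0.92],
    "DRESDEN_temp_mean": [1.0, 1.4],
})
basel = select_region(sample)
```

Filtering by column prefix keeps the step independent of how many measurements the file provides per city.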

Perform one-hot encoding and define feature vector and target variable

Define training and testing sets and train the model

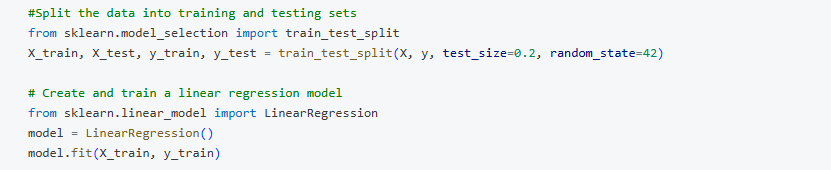

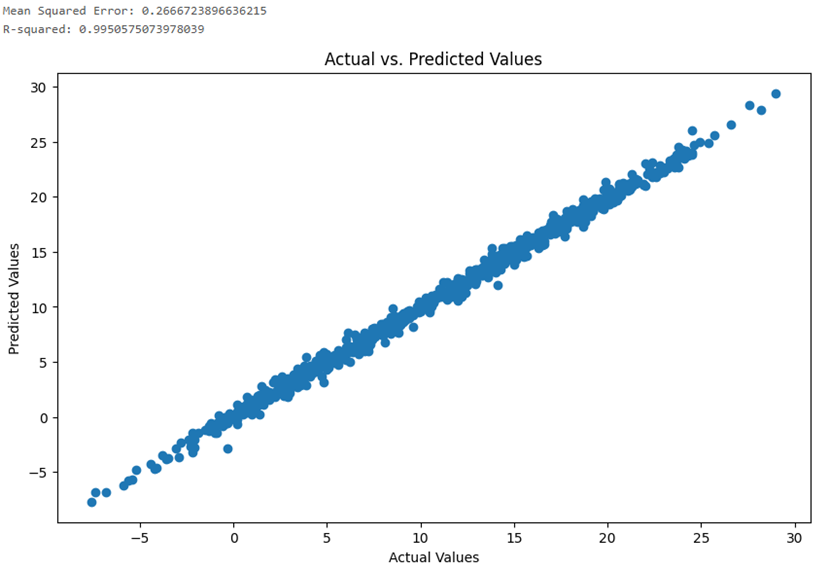
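The encoding, split and training steps can be sketched as follows. This is an illustration under stated assumptions, not the exact notebook code: the only feature is the one-hot encoded month, the target is a column named `BASEL_temp_mean`, and the data is a synthetic seasonal curve standing in for the real Basel history.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the Basel data: a seasonal curve plus noise.
rng = np.random.default_rng(42)
dates = pd.date_range("2015-01-01", "2019-12-31", freq="D")
day_of_year = dates.dayofyear.to_numpy()
seasonal = 10 - 9 * np.cos(2 * np.pi * (day_of_year - 15) / 365.25)
df = pd.DataFrame({
    "MONTH": dates.month.to_numpy(),
    "BASEL_temp_mean": seasonal + rng.normal(0, 2, len(dates)),
})

# One-hot encode the month; drop_first avoids a redundant column
# because the model already fits an intercept.
X = pd.get_dummies(df["MONTH"], prefix="m", drop_first=True, dtype=float)
y = df["BASEL_temp_mean"]

# Hold out 20% of the days for testing (split ratio is an assumption).
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

model = LinearRegression().fit(X_train, y_train)
y_pred = model.predict(X_test)
```

With month dummies as the only features, the model effectively learns one average temperature per month, which is enough for a coarse monthly forecast like the one used here.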

Evaluate the model with MSE and R-squared

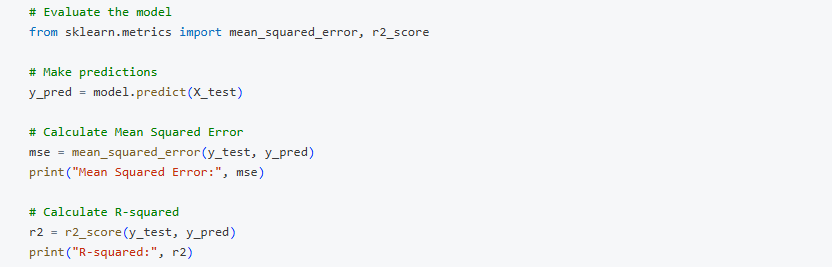

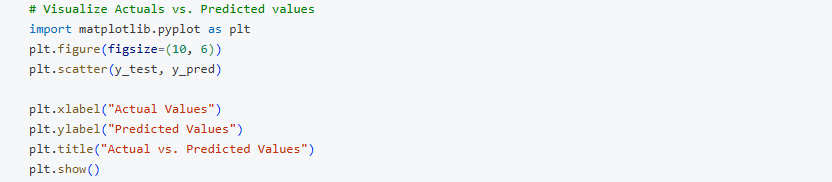
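To make the two metrics concrete, here is a small sketch that computes them by hand on made-up numbers. The function names are my own; scikit-learn's `mean_squared_error` and `r2_score` implement the same definitions.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of the squared residuals."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Illustrative temperatures in degrees Celsius, not real results.
y_true = [18.0, 20.0, 22.0, 24.0]
y_pred = [19.0, 20.0, 21.0, 24.0]

error = mse(y_true, y_pred)        # (1 + 0 + 1 + 0) / 4 = 0.5
score = r_squared(y_true, y_pred)  # 1 - 2 / 20 = 0.9
```

A lower MSE means smaller average errors, while an R-squared close to 1 means the model explains most of the variation in the observed temperatures.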

Visualize model accuracy

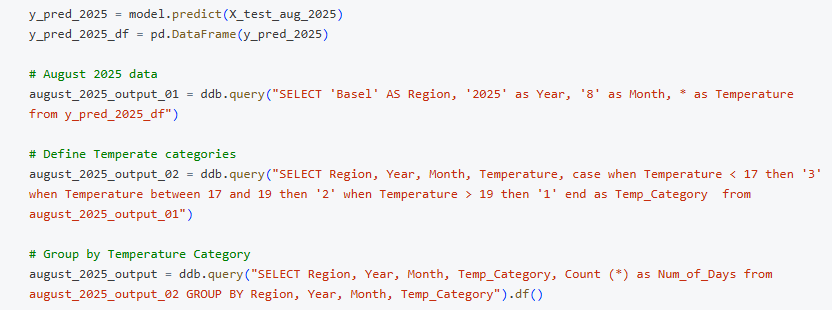

Now the trained model is ready to predict August 2025 temperatures.

"X_test_aug_2025" contains a feature vector;

"y_pred_2025" – predicted temperatures;

"August_2025_output" data frame contains output dataset with the number of days in each temperature category.

Defined categories:

"Cold" – lower than 17 degrees;

"Warm" – between 17 and 19 degrees;

"Hot" – higher than 20 degrees.

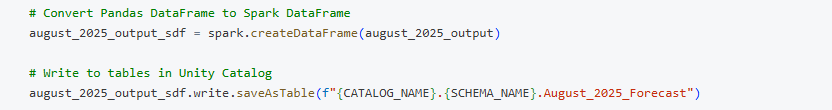

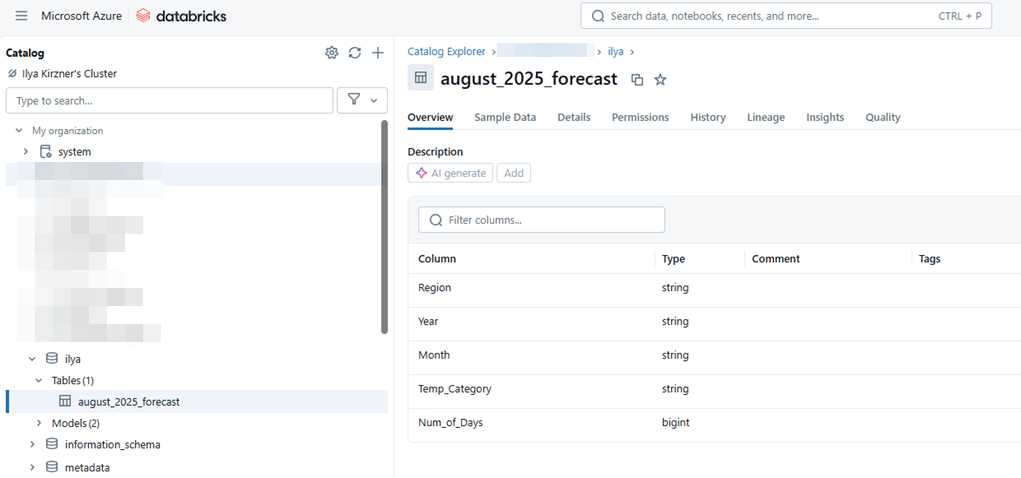
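The categorization step can be sketched like this. Note one assumption: the category list above leaves the range between 19 and 20 degrees open, so the sketch treats everything from 17 up to and including 20 as "Warm". The helper names and the short list of predicted temperatures are hypothetical.

```python
import pandas as pd


def categorize(temp_c: float) -> str:
    """Map a temperature to a category (boundary handling is an assumption)."""
    if temp_c < 17:
        return "Cold"
    if temp_c <= 20:
        return "Warm"
    return "Hot"


def days_per_category(temps, region="BASEL", year=2025, month=8):
    """Count the predicted days that fall into each temperature category."""
    cats = pd.Series([categorize(t) for t in temps], name="Temperature_Category")
    counts = cats.value_counts().reindex(["Cold", "Warm", "Hot"], fill_value=0)
    return pd.DataFrame({
        "Region": region,
        "Year": year,
        "Month": month,
        "Temperature_Category": counts.index,
        "Num_of_Days": counts.to_numpy(),
    })


# Illustrative predictions; in the notebook these come from the model.
y_pred_2025 = [16.2, 18.4, 21.0, 22.5, 19.9, 15.0, 20.0]
August_2025_output = days_per_category(y_pred_2025)
```

Reindexing with all three categories keeps a row with zero days for a category that does not occur, so the downstream join in Datasphere always finds a match.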

Convert the pandas data frame into a Spark data frame and save the table in Unity Catalog. The final table "August_2025_Forecast" contains Region, Year, Month, Temperature Category and the expected Number of Days per temperature category.

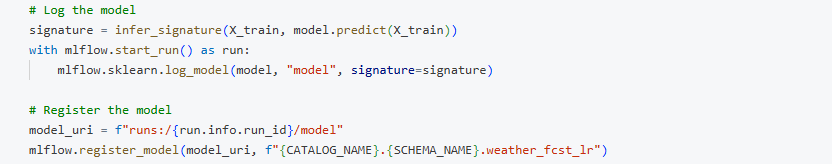

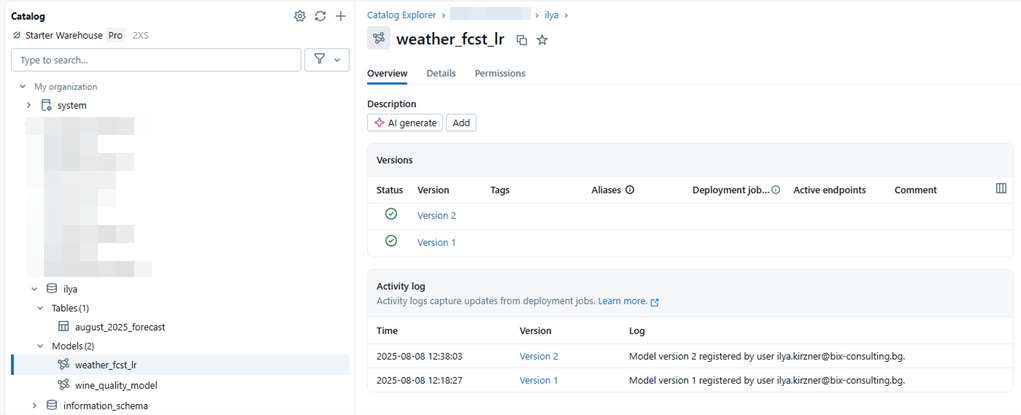
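A minimal sketch of this conversion, wrapped in a hypothetical helper `save_forecast`. It relies on the `spark` session that Databricks notebooks provide; the catalog and schema in the commented call (`main.default`) and the overwrite mode are assumptions.

```python
def save_forecast(spark, pandas_df, table_name):
    """Convert a pandas DataFrame to Spark and save it as a managed table."""
    spark_df = spark.createDataFrame(pandas_df)
    # "overwrite" replaces the table on every run (an assumption for this sketch).
    spark_df.write.mode("overwrite").saveAsTable(table_name)
    return spark_df


# In a Databricks notebook `spark` is predefined, so the call could look like:
# save_forecast(spark, August_2025_output, "main.default.August_2025_Forecast")
```

Using a three-part name (catalog.schema.table) is what places the table in Unity Catalog rather than in the legacy Hive metastore.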

Log and register the model to be able to use it in the future.

The model "weather_fcst_lr" is saved in the Unity Catalog.
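Logging and registering could look roughly like the sketch below, assuming MLflow with the scikit-learn flavor is available in the workspace. The helper `register_model` and the catalog and schema in the commented call are hypothetical. Unity Catalog expects a model signature, so the sketch infers one from sample data.

```python
def register_model(model, X_sample, y_sample, full_name):
    """Log a scikit-learn model and register it under a Unity Catalog name."""
    # Imported lazily so the helper can be defined outside Databricks as well.
    import mlflow.sklearn
    from mlflow.models import infer_signature

    # Point the MLflow registry at Unity Catalog.
    mlflow.set_registry_uri("databricks-uc")
    signature = infer_signature(X_sample, y_sample)
    with mlflow.start_run():
        return mlflow.sklearn.log_model(
            model,
            "model",
            signature=signature,
            registered_model_name=full_name,
        )


# Hypothetical call; catalog and schema are placeholders:
# register_model(model, X_train, y_train, "main.default.weather_fcst_lr")
```

Once registered, the model can be loaded by name in later notebooks or jobs without retraining.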

Modelling in Datasphere

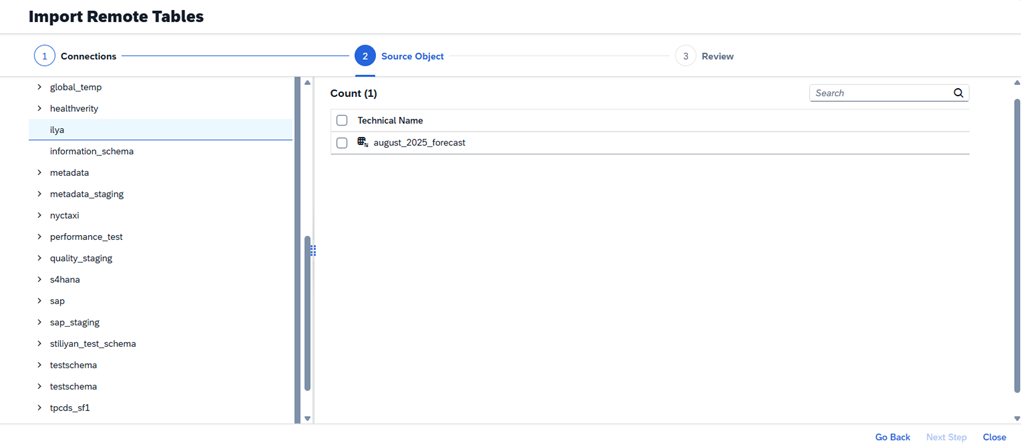

As the next step I’d like to pull the weather forecast from Databricks into Datasphere and bundle it with the transactional data.

The Datasphere model is shown below:

The weather forecast generated in Databricks is pulled via a remote table in Datasphere using a Generic JDBC connection:

Check out this blog, which shows the steps to establish a JDBC connection between Datasphere and Databricks.

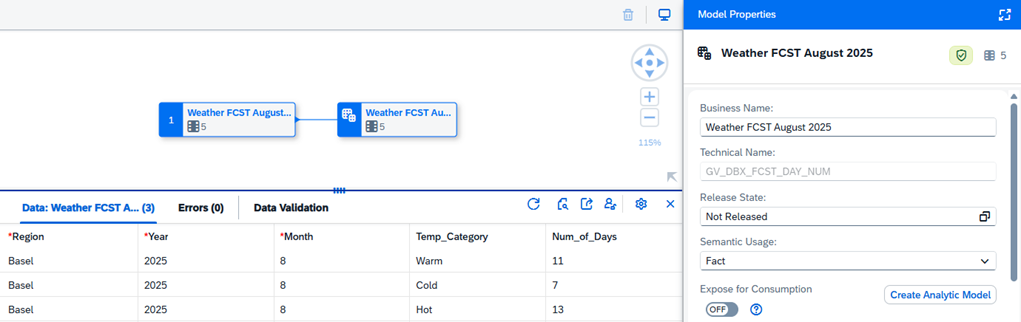

A Graphical View "GV_DBX_FCST_DAY_NUM" is built on top of the remote table:

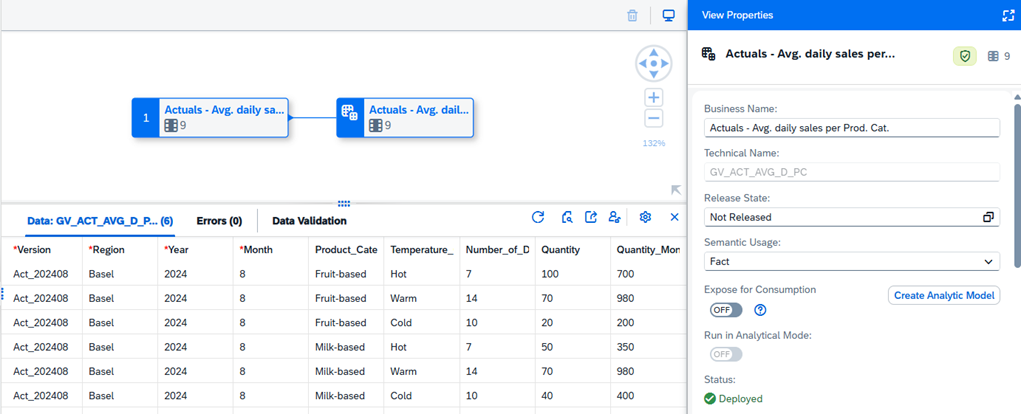

The Graphical View "GV_ACT_AVG_D_PC" is built on a local table that contains the previous year’s average daily sales quantity per product category for each Temperature category. You can see the data preview below:

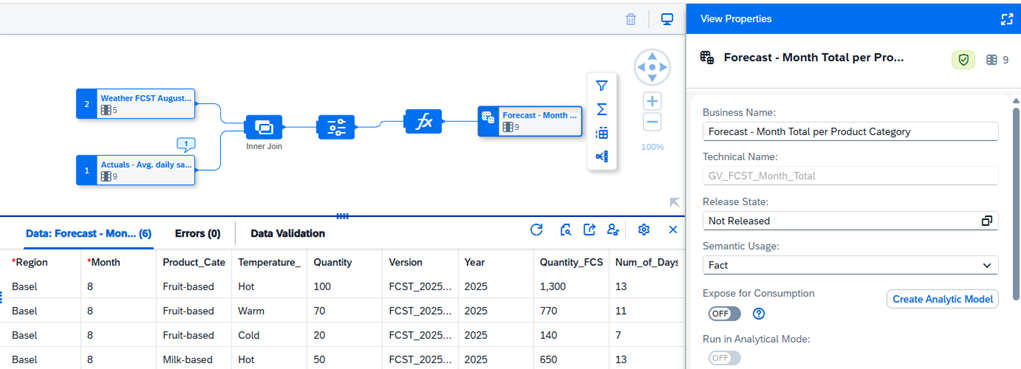

The Graphical View "GV_FCST_QUANT" contains the calculation of next month’s Demand Forecast ("Quantity_FCST").

The August 2024 average daily sales quantity is used for the current year’s calculation and multiplied by the Number of Days ("Num_of_Days") in the respective Temperature category:

The previous year’s actuals and the current year’s forecast are combined in the view "GV_FCST_Month_Total".

In the data preview below you can see the "Quantity" column, which represents the actual average daily quantity sold and is also used for the current year’s forecast calculation, and the "Quantity_FCST" column, calculated as "Quantity" * "Number of days", which represents the current year’s monthly quantity forecast. It is important to mark both columns as measures in the view output.

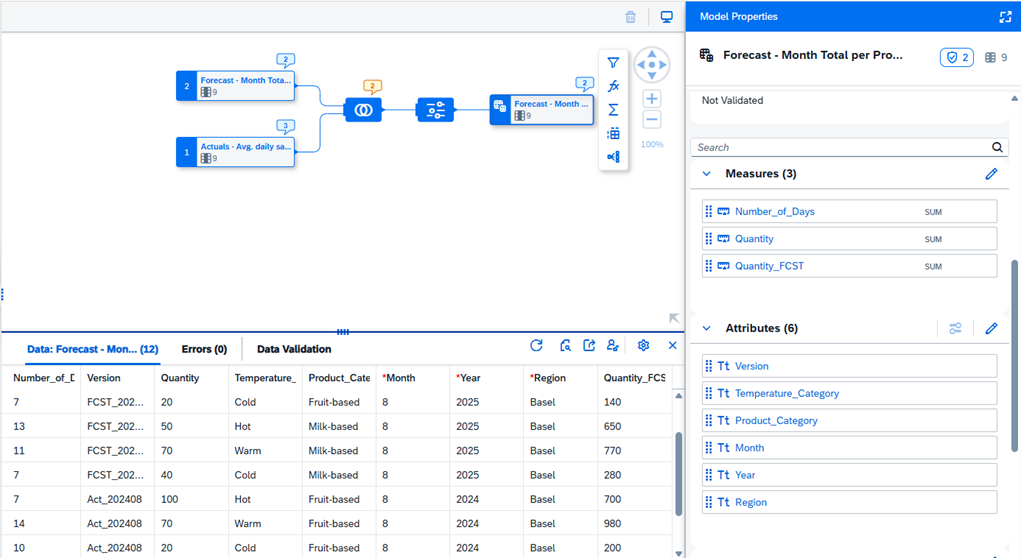

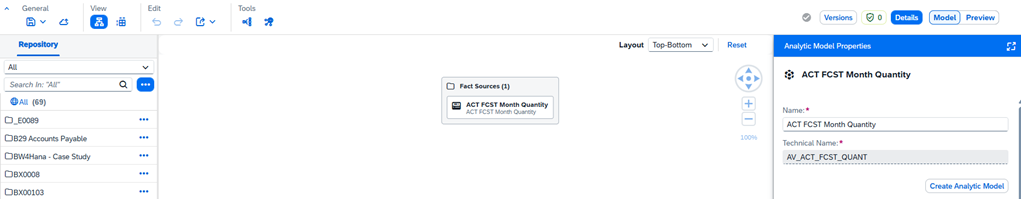
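The forecast arithmetic itself is simple and can be illustrated outside Datasphere with a short pandas sketch. The product categories and all numbers below are made up for illustration; only the column names "Quantity", "Num_of_Days" and "Quantity_FCST" come from the views described above, and the join on the temperature category is my assumption about how the two views are combined.

```python
import pandas as pd

# Previous-year average daily sales per product and temperature category
# (the role played by GV_ACT_AVG_D_PC). Values are illustrative.
avg_daily = pd.DataFrame({
    "Product_Category": ["Cone", "Cone", "Cone", "Tub", "Tub", "Tub"],
    "Temperature_Category": ["Cold", "Warm", "Hot"] * 2,
    "Quantity": [40.0, 70.0, 120.0, 20.0, 35.0, 60.0],
})

# Forecasted number of days per temperature category
# (the role played by GV_DBX_FCST_DAY_NUM). Values are illustrative.
forecast_days = pd.DataFrame({
    "Temperature_Category": ["Cold", "Warm", "Hot"],
    "Num_of_Days": [5, 10, 16],
})

# Join on the temperature category and multiply daily quantity by days.
fcst = avg_daily.merge(forecast_days, on="Temperature_Category", how="inner")
fcst["Quantity_FCST"] = fcst["Quantity"] * fcst["Num_of_Days"]

# Monthly forecast per product category.
monthly_total = fcst.groupby("Product_Category", as_index=False)["Quantity_FCST"].sum()
```

For the "Cone" rows this gives 40 * 5 + 70 * 10 + 120 * 16 = 2820 units for the month.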

The Analytic Model "AV_ACT_FCST_QUANT" is created on top of the Graphical View "GV_FCST_Month_Total" for further consumption in SAC:

SAC Visualization

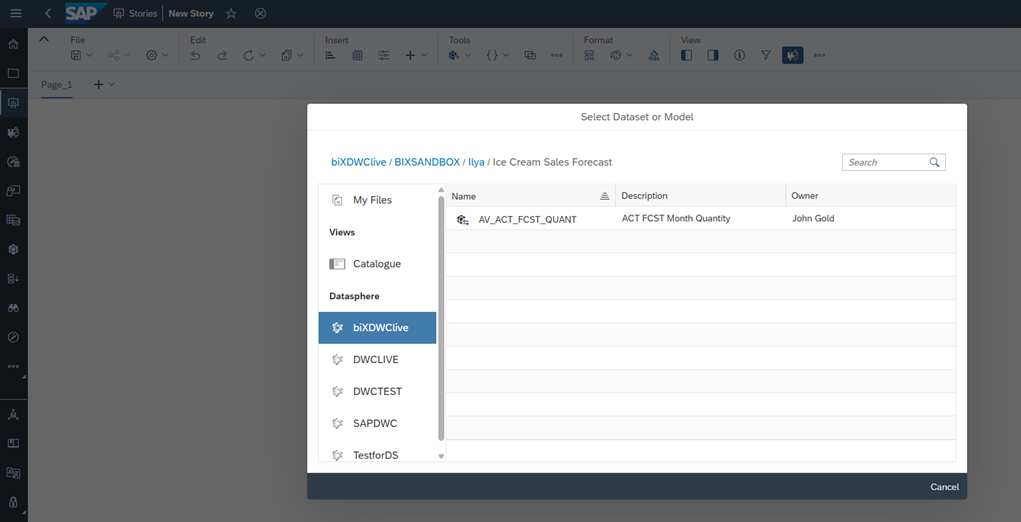

The Analytic Model "AM_ACT_FCST_QUANT" is used directly as a data source in the SAC story. The model is available via the SAC-Datasphere connection:

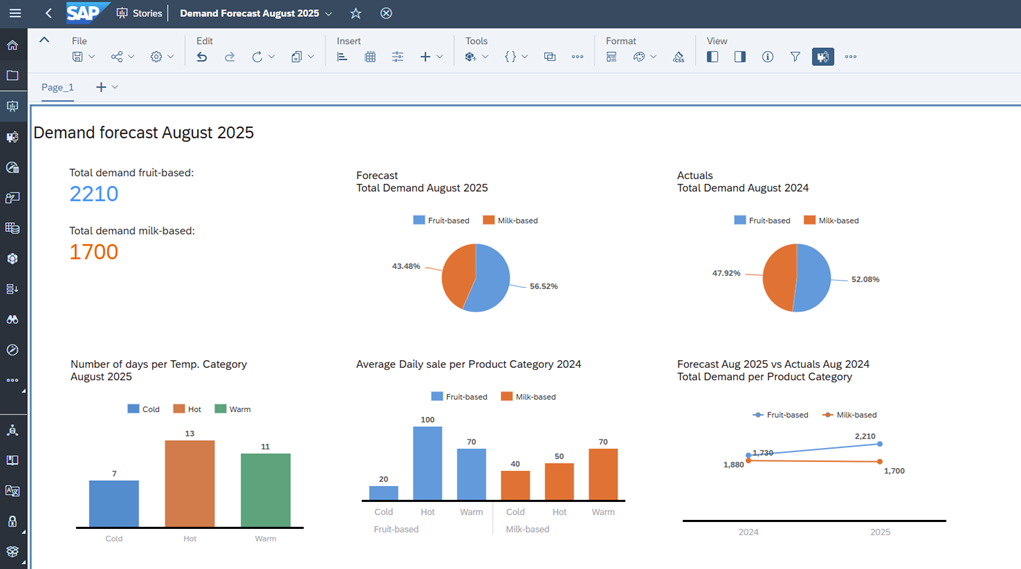

The Story below contains visualizations built upon the Analytic model:

- total demand per product category

- number of days per temperature category

- previous year’s average daily sales per product category

- forecast vs actuals comparison

Summary

In this blog I have shown how SAP Datasphere, SAP Analytics Cloud and Databricks can work together to bundle corporate data with the output of an AI model. Even though the use case is simplified, the same concept can be applied to cases of any complexity, such as customer behavior analysis, personalized chatbot creation, inventory management optimization, social media campaign analysis and many more.

I have demonstrated how to:

- build a Predictive Linear Regression model in Databricks for weather prediction

- share predicted results with Datasphere

- build an Analytical Model in Datasphere, combining transactional data and predicted forecast received from Databricks

- consume the Analytical Model in SAC and build a Demand Forecast Story

Stay tuned for more articles on Business Data Cloud, Databricks and business AI, and on how these applications are changing the way we interact with corporate data!

If you are interested in further discovering SAP Business Data Cloud, you might like to watch the webinar on the topic, prepared by the biX Consulting team: https://www.bix-consulting.com/en/sap-business-data-cloud/